In a bombshell for the SEO world, a leak that came to light on May 5, 2024, exposed closely guarded details of how Google ranks search results. More than 14,000 attributes, widely described as ranking factors, were revealed through Google's accidentally published internal documentation, shedding light on how websites climb the search ladder. Validated by SEO experts, the leak appeared to confirm the long-held suspicion that domain authority matters, while surprising many with the weight Google seems to place on clicks and even on mentions of your brand online. Beyond the strategic shifts, the leak raises troubling questions about user privacy and the vast amount of data Google collects through Chrome. This article examines the fallout of the leak, exploring how businesses can reshape their SEO strategies and the ethical implications of Google’s data practices.

May 5, 2024, marked the first-ever leak of such a comprehensive collection of Google Search API documentation in the history of search engines. It is a historic moment we might never have seen if Erfan Azimi, founder and CEO of an SEO agency, hadn't spotted Google's documents, which had been mistakenly published on GitHub on March 27, 2024, and simply never removed. Ironically, they were published under the Apache 2.0 license, which allows anyone who accesses the documents to use, modify, and distribute them. Azimi's next step, sharing the documents with two of the most reputable SEO experts, Rand Fishkin and Mike King, was therefore within the legal boundaries of the license. Both released the documents and accompanying analyses on May 27.

Google initially dodged questions about the documents' authenticity but eventually confirmed that they were genuine.

The 2,500+ leaked documents describe 14,014 attributes (API features), often loosely called "ranking factors," from Google Search's Content Warehouse API. Strictly speaking, they show what data Google collects and stores, not how Google weighs or interprets that data.

The leaked information holds significant value for any company aiming to boost organic traffic and sales conversions from Google search. It provides unique insight into the factors influencing Google's search rankings, allowing businesses to reshape their SEO strategies accordingly.

On the other hand, the leak highlights Google's lack of transparency about how user data is collected and used in its search algorithms, raising ethical and privacy concerns about the extent and implications of the search giant's data collection.

Understanding the Impact of the Google Search API Docs Leak on SEO: Key Discoveries & Expert Opinions

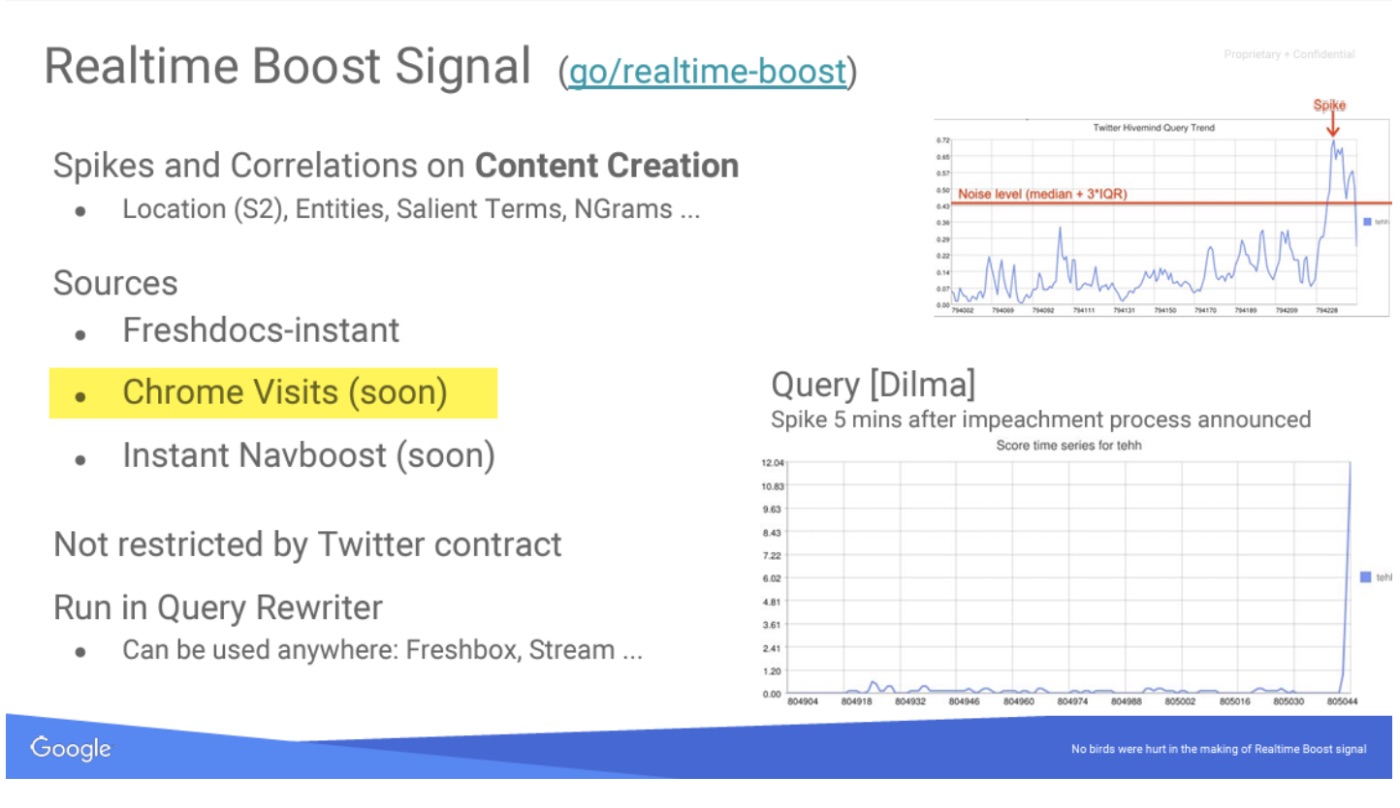

Navboost is one of Google's most vital ranking signals

Navboost is a Google ranking system that was revealed during the antitrust trial brought against Google by the U.S. Department of Justice. It refines search results using signals such as user clicks to identify the most relevant results for a query. Navboost draws on a rolling 13 months of click data and differentiates results by location and device type (mobile or desktop). Because it can significantly affect a website's visibility in search results, it is a crucial signal for SEO professionals to understand and optimize for.
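To make the 13-month window and the location/device split concrete, here is a minimal Python sketch of how click logs could be aggregated in a Navboost-like way. It is not Google's implementation: the `Click` record, its field names, and the 396-day approximation of 13 months are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical click-log record. The field names are illustrative only;
# they are not taken from Google's documentation.
@dataclass(frozen=True)
class Click:
    query: str
    url: str
    day: date
    locale: str  # e.g. "en-US"
    device: str  # "mobile" or "desktop"

# Roughly 13 months (13 x ~30.4 days); the exact cutoff is an assumption.
WINDOW = timedelta(days=396)

def aggregate_clicks(clicks, today):
    """Count clicks per (query, url, locale, device), ignoring clicks older than WINDOW."""
    counts = defaultdict(int)
    for c in clicks:
        if today - c.day <= WINDOW:
            counts[(c.query, c.url, c.locale, c.device)] += 1
    return dict(counts)

log = [
    Click("running shoes", "https://example.com/a", date(2024, 5, 20), "en-US", "mobile"),
    Click("running shoes", "https://example.com/a", date(2024, 5, 21), "en-US", "mobile"),
    Click("running shoes", "https://example.com/a", date(2022, 1, 1), "en-US", "mobile"),  # outside the window
    Click("running shoes", "https://example.com/a", date(2024, 5, 22), "en-US", "desktop"),
]
print(aggregate_clicks(log, today=date(2024, 6, 1)))
```

Keying the counts by locale and device means the same page can build a strong click history on mobile in one market and a weak one on desktop in another, which mirrors the segmentation described above.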

Clicks are, indeed, a primary ranking signal

Google has denied for years that clicks are a primary ranking factor. Its representatives, including Gary Illyes, have consistently emphasized that click-through rate (CTR) is a "very noisy signal" and that using clicks directly in rankings would be problematic because of the potential for manipulation. They have explained that while click data is used for evaluation and experimentation to assess changes to the search algorithm, it is not a primary factor in determining search rankings.

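The leaked documents tell a different story: Navboost-related modules store click metrics such as goodClicks, badClicks, and lastLongestClicks. It does matter how many clicks, and what kind of clicks, a website can generate. The more on-page optimization and continuous content marketing you do, the more traffic you're likely to attract, which can translate into more clicks, higher rankings, and higher conversion rates.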

Domain authority matters, even though Google officials have long denied it

Google representatives have consistently misdirected and misled us about how their systems operate, aiming to influence SEO behavior. Their public statements may not be intentional lies, but they are designed to deceive potential spammers (and many legitimate SEO professionals) by obscuring how search results can be influenced. Gary Illyes, an analyst on the Google Search team, has repeatedly denied that Google uses an overall domain authority measure. He's not alone: John Mueller, Google's Senior Webmaster Trends Analyst and Search Relations team lead, once stated that Google doesn't have a website authority score.

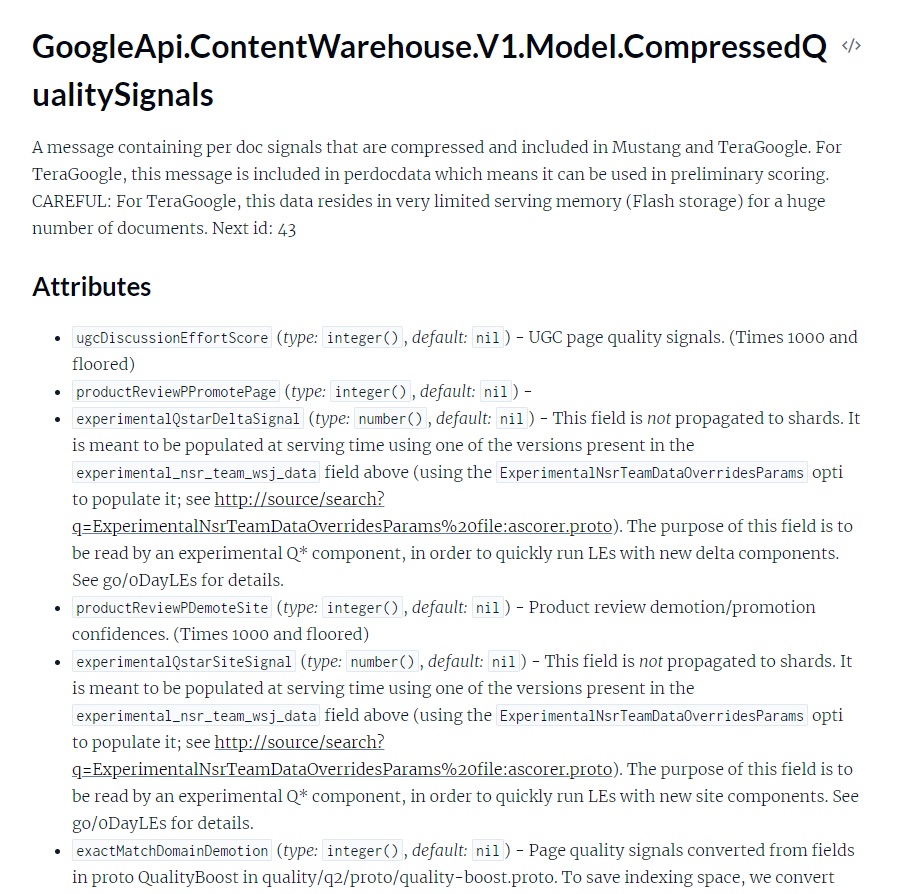

However, the leak suggests that Google does have an overall domain authority measure. Among the Compressed Quality Signals stored for each document, Google computes a feature called "siteAuthority." According to Mike King, founder and CEO of iPullRank, although the exact computation of this measure and its use in downstream scoring functions remain unclear, we now know definitively that a Google domain authority metric exists and is used in the Q* ranking system.

Google does whitelist some sites and give them preferential treatment in search results

The Google Search API leak revealed the existence of whitelists, such as the isElectionAuthority and isCovidLocalAuthority flags, that appear to be used to ensure the quality and reliability of information on sensitive topics like health and news, where misinformation could have drastic implications for public well-being.

During critical periods like the COVID-19 pandemic, Google appears to have used whitelists to suppress misinformation and prioritize credible sources. This would have helped curb the spread of false information about the virus, treatments, and vaccines, so that users received accurate and trustworthy information.

Presumably, websites included in these whitelists have demonstrated authority, credibility, and a consistent track record of providing reliable information, although the documents do not spell out the inclusion criteria.

However, the use of whitelists also raises concerns about transparency and fairness, as critics question both the potential for bias and the selection criteria.

For SEO, this highlights the importance of building credibility and trustworthiness. Websites aiming to be included in whitelists should focus on accurate reporting and high editorial standards, including clear correction policies and visible contact information.

Despite Google's caution against making assumptions based on the leaked information, the insights into whitelists emphasize their role in curating information and maintaining the quality of search results. This underscores the importance of credibility, accuracy, and trust in the digital information landscape.

Site categories are limited in Google results

The leaked documents suggest that Google could be capping the presence of various site categories, such as company blogs, commercial sites, and personal websites, within the search results for specific queries. This approach aims to diversify the types of sources presented to users, ensuring a broader range of perspectives and reducing the dominance of any single type of site.

For instance, Google might decide that only a certain number of travel blogs or commercial travel sites should appear in the results for a given travel-related search query. This limitation helps balance the search results, providing users with a mix of information from different types of sources. It prevents search results from being overly saturated with one category, such as personal blogs or commercial sites, which might not always offer the most reliable or varied information.
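The capping idea can be sketched as a simple post-ranking filter. In this hypothetical Python example, `cap_categories` walks an already ranked list and skips any result whose category has reached its limit. The category labels and cap values are invented for illustration and say nothing about the limits Google actually applies, if any.

```python
def cap_categories(results, caps):
    """Keep results in rank order, skipping any whose category has hit its cap.

    results: list of (url, category) tuples, best result first.
    caps: dict mapping category -> maximum number of results allowed.
          Categories missing from `caps` are not limited.
    """
    kept = []
    seen = {}  # category -> number of results kept so far
    for url, category in results:
        limit = caps.get(category)
        if limit is not None and seen.get(category, 0) >= limit:
            continue  # this category is already at its cap
        seen[category] = seen.get(category, 0) + 1
        kept.append((url, category))
    return kept

# Hypothetical ranking for a travel query.
ranked = [
    ("blog1.example", "personal_blog"),
    ("shop1.example", "commercial"),
    ("blog2.example", "personal_blog"),
    ("blog3.example", "personal_blog"),
    ("shop2.example", "commercial"),
    ("news1.example", "news"),
]
print(cap_categories(ranked, caps={"personal_blog": 2, "commercial": 1}))
```

Here the third personal blog and the second commercial site are dropped, letting the news source surface. A production system might demote surplus results rather than remove them; dropping them simply keeps the sketch short.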

If applied this way, the strategy would reflect Google's aim of delivering a diverse and balanced search experience. By controlling the mix of site types in search results, Google can enhance the quality and relevance of the information presented to users, giving them a well-rounded view of the available content.

Mentions of entities can influence search rankings similarly to links

The Google Search API leak provided intriguing insights into how mentions of entities, such as names or companies, could influence search rankings similarly to traditional backlinks. Mentions refer to instances where a name or phrase is referenced across the web without necessarily being linked. These mentions can be an important signal to Google's algorithm, indicating the relevance and authority of a particular entity.

The leaked documents suggest that Google tracks these mentions and potentially uses them to assess the prominence and credibility of entities. For example, frequent mentions of a brand or individual across various reputable websites could positively impact their search rankings, much like how backlinks from authoritative sites boost SEO. This indicates that Google's algorithm considers not only direct links but also an entity's overall presence and discussion across the web.
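As a rough way to monitor your own brand's footprint, the sketch below counts linked and unlinked mentions of an entity in a page's HTML, then weights them by a per-site reputation score. Everything here is an assumption: the `site_weight` values are hypothetical, matching is a plain case-insensitive word-boundary regex, and nothing in the leak tells us how Google actually scores mentions.

```python
import re
from html.parser import HTMLParser

class MentionCounter(HTMLParser):
    """Counts case-insensitive mentions of an entity, split into linked and unlinked."""

    def __init__(self, entity):
        super().__init__()
        self.pattern = re.compile(r"\b" + re.escape(entity) + r"\b", re.IGNORECASE)
        self.link_depth = 0  # > 0 while inside an <a> element
        self.linked = 0
        self.unlinked = 0

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.link_depth += 1

    def handle_endtag(self, tag):
        if tag == "a" and self.link_depth > 0:
            self.link_depth -= 1

    def handle_data(self, data):
        n = len(self.pattern.findall(data))
        if self.link_depth:
            self.linked += n
        else:
            self.unlinked += n

def count_mentions(html, entity):
    counter = MentionCounter(entity)
    counter.feed(html)
    counter.close()
    return counter.linked, counter.unlinked

def mention_score(pages, entity):
    """pages: list of (html, site_weight), where site_weight is a hypothetical 0..1 reputation score."""
    total = 0.0
    for html, weight in pages:
        linked, unlinked = count_mentions(html, entity)
        total += weight * (linked + unlinked)
    return total

page = '<p>Acme makes tools. Read <a href="https://acme.example">Acme</a> reviews. acme rocks.</p>'
print(count_mentions(page, "Acme"))
print(mention_score([(page, 0.9), ("<p>No mention here.</p>", 0.5)], "Acme"))
```

Counting unlinked mentions separately makes the point of this section visible: most of a brand's web presence may never carry a link at all.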

Clickstream data from Chrome can potentially impact organic rankings through paid clicks but raises questions about privacy

Chrome clickstream data refers to the detailed records of user interactions and behaviors within the Google Chrome browser, including which links are clicked, how long users stay on a page, and their navigation paths. This data provides Google with a rich source of information about user preferences and behaviors, which can be leveraged to refine search algorithms and improve the relevance of search results.

For instance, if many users click on a particular link and spend considerable time on that page, it may signal to Google that the page is of high quality and relevance, potentially boosting its ranking in search results.
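The "long click" idea can be illustrated in a few lines of Python. This is a hypothetical sketch: the 60-second `LONG_CLICK_SECONDS` threshold and the `(url, dwell_seconds)` event format are assumptions, since the leak does not say how Google separates good clicks from bad ones.

```python
from collections import defaultdict

LONG_CLICK_SECONDS = 60  # hypothetical threshold; Google has not published one

def long_click_ratio(events):
    """events: iterable of (url, dwell_seconds).

    Returns a dict mapping each url to the share of its clicks that were 'long',
    i.e. the user stayed at least LONG_CLICK_SECONDS before leaving.
    """
    totals = defaultdict(int)
    long_clicks = defaultdict(int)
    for url, dwell in events:
        totals[url] += 1
        if dwell >= LONG_CLICK_SECONDS:
            long_clicks[url] += 1
    return {url: long_clicks[url] / totals[url] for url in totals}

events = [("a", 120), ("a", 5), ("a", 90), ("b", 3), ("b", 10)]
print(long_click_ratio(events))
```

Page "a" keeps two of its three visitors past the threshold, while every visit to page "b" bounces quickly; under this assumption, "a" would look like the more satisfying result.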

This discovery has profound implications for SEO strategies. It suggests that user engagement metrics captured through Chrome can significantly influence search rankings, beyond traditional SEO practices like keyword optimization and backlink building. SEO professionals should focus on creating content that attracts clicks and retains user interest, while ensuring fast load times and easy navigation. By enhancing the overall user experience, websites can generate stronger engagement signals in Chrome clickstream data and improve their visibility and performance in Google's search results.

However, this discovery also raises questions about the extent of user data collection and how it's used beyond just improving search results.

On top of that, understanding ranking factors like clickstream data could allow malicious actors to manipulate search results by artificially inflating clicks on certain websites. This could lead to users being exposed to misleading or harmful content and put privacy at huge risk.

Quality content promoted to the right audience always wins

Quality content and a well-established backlink strategy can drive traffic and help increase web rankings. Mike King says: "After reviewing these features that give Google its advantages, it is quite obvious that making better content and promoting it to audiences that it resonates with will yield the best impact on those measures."

Significant drops in traffic can signal issues to Google's algorithms

While Google's official stance has often emphasized that traffic loss alone does not lead to penalties, the leaked documents and various SEO expert analyses suggest otherwise.

Significant drops in traffic can indeed signal issues to Google's algorithms. For example, the leaked documents highlighted concepts such as "content decay" and the "last good click," which imply that a consistent decline in traffic and user engagement can negatively affect rankings. If a website's traffic drops substantially, say from 10K to 2K new users per month, Google's algorithm might interpret this as a decrease in content relevance or quality, potentially leading to a penalty or reduced visibility in search results.
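The 10K-to-2K example is an 80% decline: (2,000 - 10,000) / 10,000 = -0.8. A site owner could watch for declines of this size with a simple check like the one below, which compares each month against the best month seen so far, so that gradual content decay is caught as well as sudden drops. The function names and the 50% default threshold are arbitrary monitoring choices, not values from the leak.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent (negative means a decline)."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (after - before) * 100 / before

def flag_decline(monthly_users, threshold_pct=-50.0):
    """Return (month_index, change_pct) for months that fell at least
    |threshold_pct| percent below the best preceding month."""
    if not monthly_users:
        return []
    flags = []
    peak = monthly_users[0]
    for month, users in enumerate(monthly_users[1:], start=1):
        change = percent_change(peak, users)
        if change <= threshold_pct:
            flags.append((month, round(change, 1)))
        peak = max(peak, users)
    return flags

print(percent_change(10_000, 2_000))
print(flag_decline([10_000, 8_000, 5_000, 2_000]))
```

Comparing against the running peak rather than only the previous month means a slow slide from 10K to 2K is flagged even if no single month drops sharply.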

Branded search holds significant value

The leaked documents also emphasize the importance of branded search, suggesting that when users specifically search for a brand, it can significantly boost that brand’s ranking in Google’s search results. This underscores the value of building a strong brand presence and recognition. SEO strategies should, therefore, include efforts to enhance brand visibility and encourage direct brand searches. This can be achieved through consistent, high-quality content marketing and by engaging with audiences across various platforms.

Mobile performance should be the top priority

Based on everything in these documents, and on Google's recent announcement about sites that don't work on mobile devices, we've got to take mobile performance seriously. From July 5, 2024, Google crawls and indexes sites only with its smartphone crawler, so if your site's content isn't accessible on mobile devices at all, it will no longer be indexed.

Google Search API Leak Implications on Digital Marketing Strategy

Reflecting on these revelations, we can identify several strategic adjustments. First, balance the zero-click mentality. Previously, our emails provided all the necessary information so readers didn't need to click through. However, since Chrome clickstream data may affect rankings, we might adjust our email strategy to encourage clicks to our blog posts. This shift ensures that user interactions with our site are captured, potentially boosting our search visibility.

Another strategic pivot involves focusing on high-traffic links. The emphasis now is on securing backlinks from high-traffic, reputable sources rather than numerous smaller ones. High-traffic sources are more likely to be recognized by Google's algorithms as indicators of credibility and relevance, thus positively influencing our rankings.

Creating demand for visual content is also crucial. Producing engaging videos and images can bias search results favorably. Visual content tends to attract more user interaction and longer engagement times – valuable metrics captured by Chrome clickstream data.

Reevaluating outlinking practices has also become necessary. Previously considered a positive SEO signal, outlinking now appears to be tied to spam scores. This requires us to reassess the value of outlinking and adjust our practices to avoid potential penalties.

Finally, the focus on mentions over links is emerging as a new strategy. Prioritizing mentions of your brand and key entities in high-quality content across the web can be as impactful as traditional link-building. This approach leverages Google's recognition of entity mentions in their ranking algorithms.

In summary, the insights from the Google Search API leak highlight the importance of consistent, high-quality content creation and distribution, user engagement, and the nuanced role of SEO practices like link building and branded search. Adjusting your strategies to align with these revelations can enhance your search rankings and overall digital presence. However, relying too heavily on this leak without considering the evolving nature of Google’s algorithm could be risky.

And what’s your take on this?

Don’t forget to check out my coverage of Google’s AI Overviews and their impact on digital marketing.