This paper is available on arXiv under a CC 4.0 license.

Authors:

(1) Zhihang Ren, University of California, Berkeley (these authors contributed equally to this work) (Email: peter.zhren@berkeley.edu);

(2) Jefferson Ortega, University of California, Berkeley (these authors contributed equally to this work) (Email: jefferson_ortega@berkeley.edu);

(3) Yifan Wang, University of California, Berkeley (these authors contributed equally to this work) (Email: wyf020803@berkeley.edu);

(4) Zhimin Chen, University of California, Berkeley (Email: zhimin@berkeley.edu);

(5) Yunhui Guo, University of Texas at Dallas (Email: yunhui.guo@utdallas.edu);

(6) Stella X. Yu, University of California, Berkeley and University of Michigan, Ann Arbor (Email: stellayu@umich.edu);

(7) David Whitney, University of California, Berkeley (Email: dwhitney@berkeley.edu).

Table of Links

- Abstract and Intro

- Related Work

- VEATIC Dataset

- Experiments

- Discussion

- Conclusion

- More About Stimuli

- Annotation Details

- Outlier Processing

- Subject Agreement Across Videos

- Familiarity and Enjoyment Ratings and References

4. Experiments

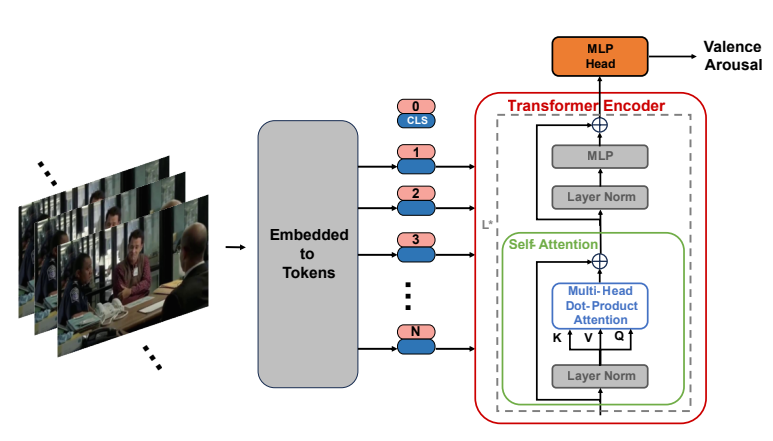

In this study, we propose a new emotion recognition in context task, i.e., inferring the valence and arousal of the selected character from both context and character information in each video frame. We propose a simple baseline model to benchmark this task; its pipeline is shown in Figure 8. The model has two simple submodules: a convolutional neural network (CNN) module for feature extraction and a visual transformer module for temporal information processing. The structure of the CNN module is adopted from ResNet50 [21]. Unlike CAER [33] and EMOTIC [32], where facial/character and context features are extracted separately and merged later, we directly encode the fully informed frame. For a single prediction, N consecutive video frames are encoded independently. The resulting feature vectors are then position-embedded and fed into a transformer encoder containing L sets of attention modules. Finally, a multilayer perceptron (MLP) head predicts arousal and valence.

4.1. Loss Function and Training Setup

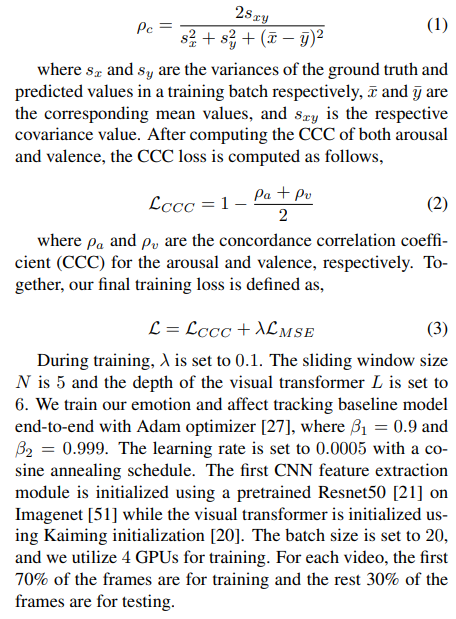

The loss function of our baseline model is a weighted combination of two separate losses. The MSE loss regularizes the local alignment between the ground-truth ratings and the model predictions. To enforce alignment between ratings and predictions on a larger scale, such as learning the temporal statistics of the emotional ratings, we also use the concordance correlation coefficient (CCC) as a regularizer. This coefficient is defined as follows:

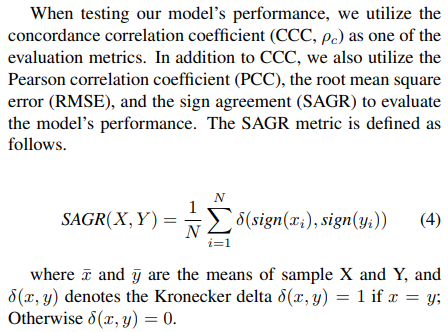
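The weighted combination described above can be sketched in plain Python. This is a minimal illustration, not the training code used in the paper: it assumes the standard (Lin) definition of CCC with population variances, assumes the CCC term enters the loss as `1 - CCC`, and uses hypothetical weights `w_ccc` and `w_mse` whose values are not taken from the paper.

```python
from statistics import fmean


def ccc(x, y):
    """Concordance correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = fmean(x), fmean(y)
    # Population (divide-by-n) variances and covariance.
    var_x = fmean([(a - mean_x) ** 2 for a in x])
    var_y = fmean([(b - mean_y) ** 2 for b in y])
    cov_xy = fmean([(a - mean_x) * (b - mean_y) for a, b in zip(x, y)])
    denom = var_x + var_y + (mean_x - mean_y) ** 2
    if denom == 0.0:
        raise ValueError("CCC is undefined for two identical constant sequences")
    return 2.0 * cov_xy / denom


def mse(x, y):
    """Mean squared error between two equal-length sequences."""
    return fmean([(a - b) ** 2 for a, b in zip(x, y)])


def combined_loss(pred, target, w_ccc=1.0, w_mse=1.0):
    """Weighted sum of a CCC term and an MSE term.

    w_ccc and w_mse are hypothetical weights (assumption, not from the paper).
    Using 1 - CCC as the loss term is also an assumption: it is 0 when the
    predictions match the targets perfectly and grows as agreement drops.
    """
    return w_ccc * (1.0 - ccc(pred, target)) + w_mse * mse(pred, target)


if __name__ == "__main__":
    ratings = [0.0, 0.5, 1.0]
    shifted = [0.5, 1.0, 1.5]  # same shape as ratings, constant offset of 0.5
    print(ccc(ratings, ratings), ccc(ratings, shifted))
    print(combined_loss(shifted, ratings))
```

In this sketch, the MSE term penalizes per-frame deviations, while the CCC term penalizes mismatches in mean, variance, and correlation over the whole sequence, which matches the local versus larger-scale roles described above.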

4.2. Evaluation Metrics

The sign agreement (SAGR) metric measures how much the signs of the individual values of two vectors X and Y match. It takes values in [0, 1], where 1 represents complete agreement and 0 represents complete contradiction. SAGR captures performance information that the other metrics miss. For example, given a valence ground truth of 0.2, predictions of 0.7 and -0.3 yield the same RMSE value. But 0.7 is clearly the better prediction because it correctly indicates a positive valence.
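The example above can be checked with a short sketch. It assumes SAGR is computed as the fraction of positions where prediction and ground truth have the same sign; treating a zero as matching only another zero is a convention chosen here, not something stated in the paper. The function names are illustrative.

```python
import math


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def sagr(pred, target):
    """Fraction of positions where pred and target share the same sign."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and equal in length")
    matches = sum(sign(p) == sign(t) for p, t in zip(pred, target))
    return matches / len(pred)


def rmse(pred, target):
    """Root mean squared error between two equal-length sequences."""
    squared = [(p - t) ** 2 for p, t in zip(pred, target)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    truth = [0.2]
    same_sign = [0.7]       # off by 0.5, but still positive valence
    opposite_sign = [-0.3]  # off by 0.5, and the valence sign is wrong

    # Both predictions have an RMSE of about 0.5 ...
    print(rmse(same_sign, truth), rmse(opposite_sign, truth))
    # ... but SAGR separates them: 1.0 for the first, 0.0 for the second.
    print(sagr(same_sign, truth), sagr(opposite_sign, truth))
```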

4.3. Benchmark Results

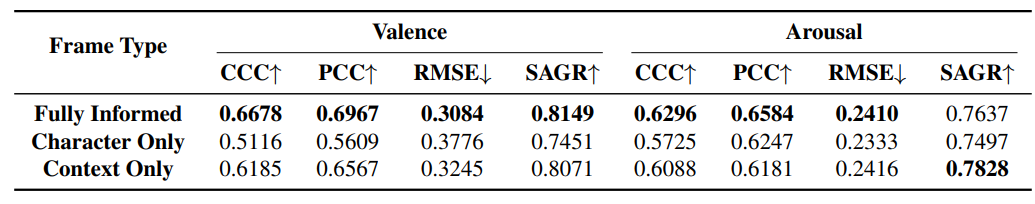

We benchmark the new emotion recognition in context task using the four aforementioned metrics: CCC, PCC, RMSE, and SAGR. Results are shown in Table 3. Our proposed simple method performs on par with the state-of-the-art methods reported on other datasets.
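One reason to report several metrics together is that each one is blind to something. As a small illustration (not taken from the paper), the sketch below computes the Pearson correlation coefficient (PCC) for predictions that follow the ground truth perfectly in shape but are compressed and offset. The data values and the helper names are made up for the example.

```python
import math
from statistics import fmean


def pcc(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = fmean(x), fmean(y)
    dx = [a - mean_x for a in x]
    dy = [b - mean_y for b in y]
    denom = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if denom == 0.0:
        raise ValueError("PCC is undefined when either sequence is constant")
    return sum(a * b for a, b in zip(dx, dy)) / denom


def rms_error(x, y):
    """Root mean squared error between two equal-length sequences."""
    return math.sqrt(fmean([(a - b) ** 2 for a, b in zip(x, y)]))


if __name__ == "__main__":
    # Hypothetical ground-truth ratings and predictions (illustrative only).
    target = [-0.4, -0.1, 0.3, 0.6]
    pred = [0.5 * t + 0.3 for t in target]  # right trend, wrong scale and offset

    # PCC is close to 1 because the relationship is perfectly linear,
    # while the RMSE stays clearly above 0.
    print(pcc(pred, target), rms_error(pred, target))
```

PCC alone would rate these predictions as nearly perfect, so an error-based metric such as RMSE, or an agreement-based one such as CCC, is needed to expose the scale and offset mismatch.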

We also investigate the importance of context and character information in emotion recognition by feeding context-only and character-only frames into the model pretrained on fully informed frames. To obtain fair comparisons and exclude the influence of differences in frame pixel distribution, we also fine-tune the pretrained model on the context-only and character-only frames. The corresponding results are also shown in Table 3. Without full information, model performance drops in both the context-only and character-only conditions.

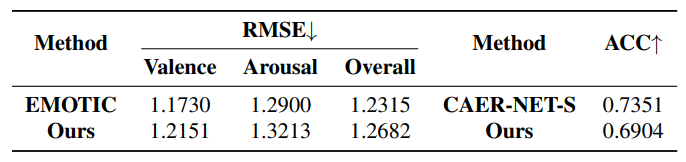

To show the effectiveness of the VEATIC dataset, we took our model pretrained on VEATIC, fine-tuned it on other datasets, and tested its performance. We tested only on EMOTIC [32] and CAER-S [33], given the simplicity of our model and its similarity to the models proposed in the papers for those datasets. The results are shown in Table 4. Our pretrained model performs on par with the methods proposed in EMOTIC [32] and CAER-S [33], which demonstrates the effectiveness of our proposed VEATIC dataset.
