Quick ChatPlus Rundown💬✨🤩

- ChatPlus is a progressive web app developed with React, NodeJS, Firebase, and other services.

- You can talk with all your friends in real-time 🗣️✨🧑🤝🧑❤️

- You can call your friends and have video and audio calls with them 🎥🔉🤩

- Send images and audio messages to your friends. An AI converts your speech to text whether you speak French, English, or Spanish 🤖✨

- The web app can be installed on any device and can receive notifications ⬇️🔔🎉

- I would really appreciate your support: leave a star on the GitHub repository and share it with your friends ⭐✨

- Check out this GitHub repository for full installation and deployment documentation: https://github.com/aladinyo/ChatPlus

Web App Live Link

Visit ChatPlus (chatplus-a3d50.firebaseapp.com) and log in with your Google account. You can install the app by clicking the down-arrow button at the top of the home page. Don't forget to accept notifications 📹🎦📞🚀.

Share it with your friends and have fun chatting with them🎈🎉😍

After using the app, you can delete your account by clicking the delete button at the top of the home page, though it would be sad to see you leave!

Note: The web app is safe to access from the live link. Your data is encrypted by Firebase and protected by security rules, so other people can't see your messages or calls with other users; they can only see your name and photo.

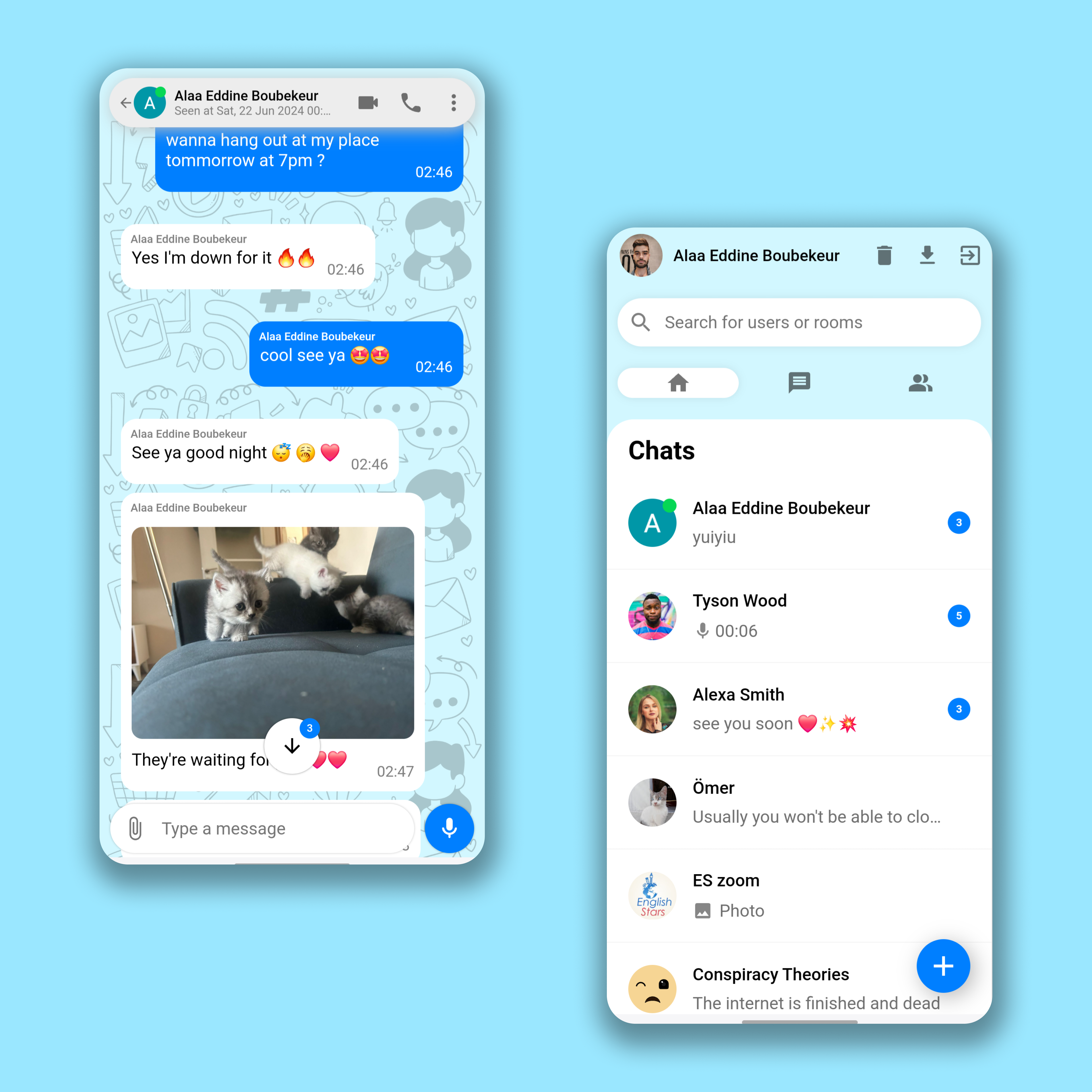

Clean Simple UI & UX

So What Is ChatPlus?

ChatPlus is one of the greatest applications I have ever made. It attracts attention with its vibrant user interface and its many messaging and call features, all implemented on the web. It gives a cross-platform experience because its frontend is designed as a PWA that can be installed anywhere and act like a standalone app. With features like push notifications, ChatPlus is the definition of a lightweight app that delivers the full feature set of a mobile app using web technologies.

God Mode Activated

Before building this app, I decided to activate God mode in my brain and use every software engineering skill I have to build the best web app 😂😂😂. It looks like the plan has worked and this app is incredibly good and practical. It works so well that my family and friends started to use it to do video calls and talk to each other.

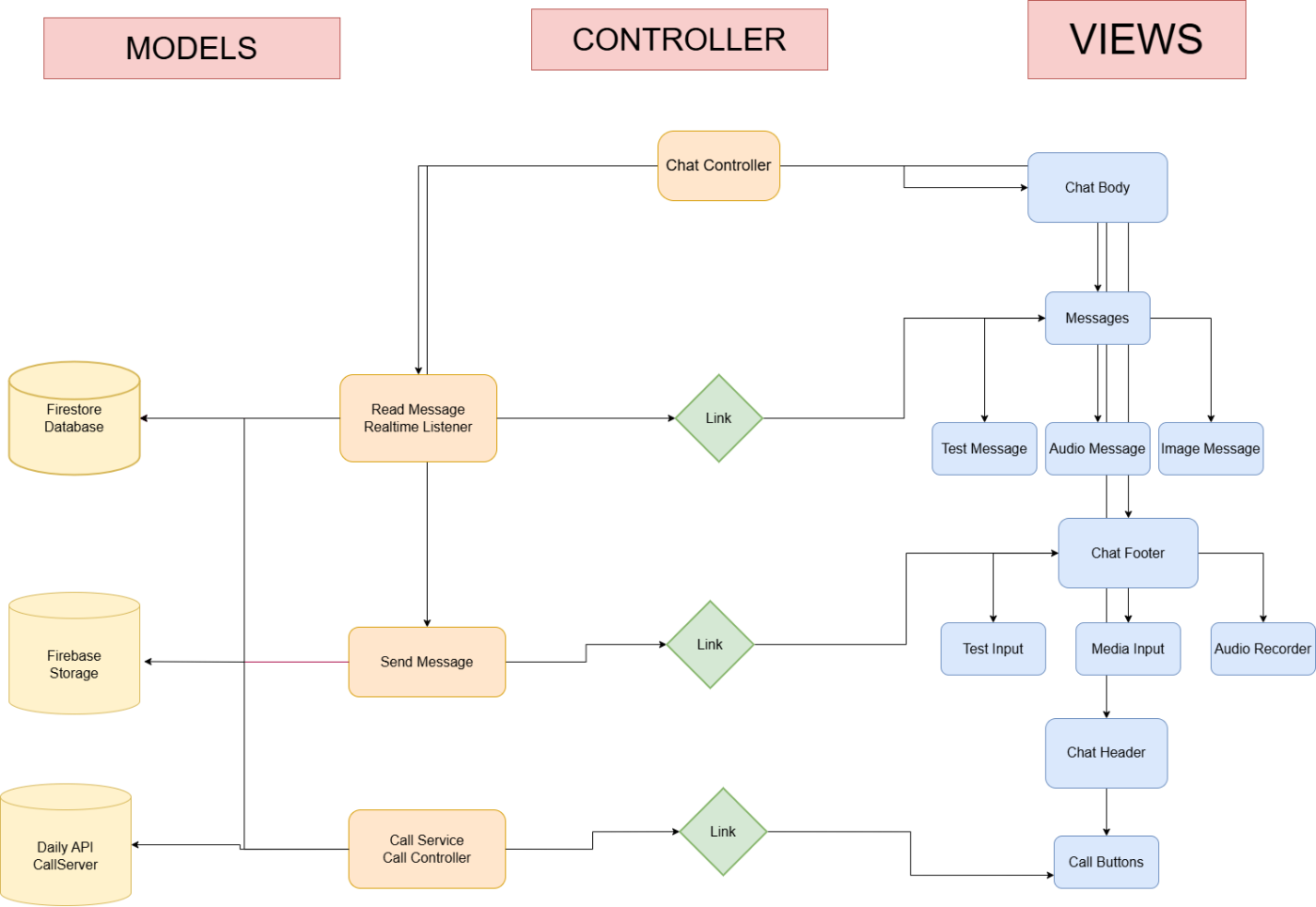

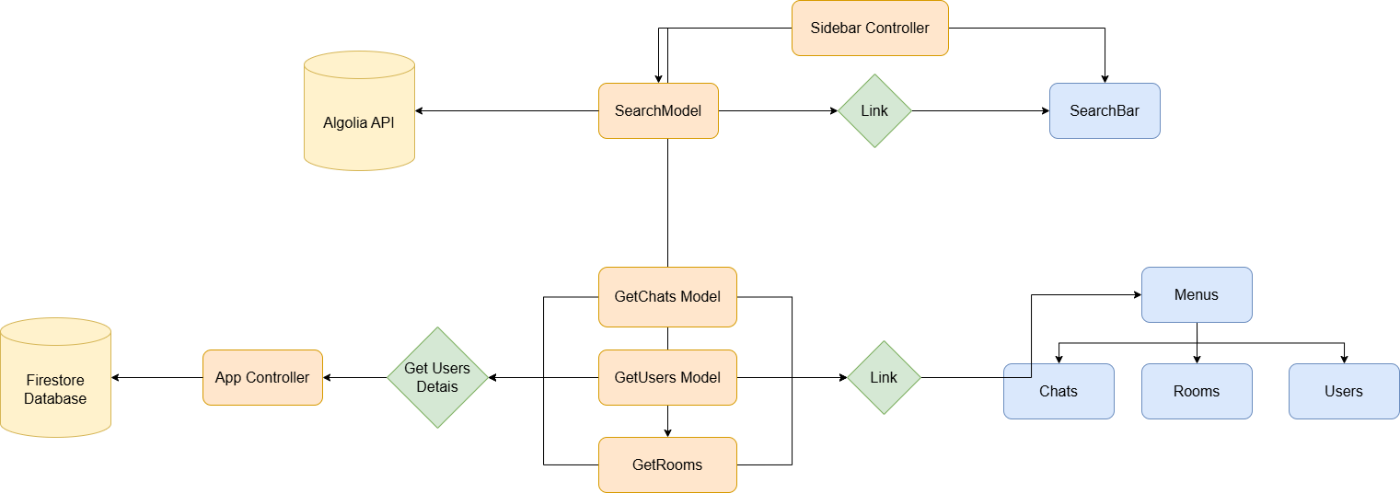

Software Architecture

My app follows the MVC software architecture. MVC (Model-View-Controller) is a software design pattern commonly used to structure user interfaces, data, and controlling logic. It emphasizes a separation between the software's business logic and its display. This "separation of concerns" provides a better division of labor and easier maintenance.
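
A minimal sketch of this separation in plain JavaScript. The names `createChatModel`, `renderChat`, and `createChatController` are hypothetical and chosen only for illustration; they are not taken from the ChatPlus code base:

```javascript
// Model: owns the data and notifies subscribers when it changes.
function createChatModel() {
  const messages = [];
  const listeners = [];
  return {
    subscribe(listener) { listeners.push(listener); },
    addMessage(text) {
      messages.push(text);
      listeners.forEach(listener => listener([...messages]));
    },
  };
}

// View: turns data into output and knows nothing about storage.
function renderChat(messages) {
  return messages.map(text => `<p>${text}</p>`).join("");
}

// Controller: wires the model to the view.
function createChatController(model, onRender) {
  model.subscribe(messages => onRender(renderChat(messages)));
  return { send: text => model.addMessage(text) };
}

let html = "";
const controller = createChatController(createChatModel(), output => { html = output; });
controller.send("hello");
```

In ChatPlus the views are React components and the models talk to Firebase, but the division of responsibilities is the same idea.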

User interface layer (View)

It consists of multiple React interface components:

- MaterialUI: Material UI is an open-source React component library that implements Google's Material Design. It's comprehensive and can be used in production out of the box. We’re going to use it for icons, buttons, and some interface elements like an audio slider.

- LoginView: A simple login view that allows the user to enter their username and password or login with Google.

- SidebarViews: multiple view components like ‘sidebar__header’, ‘sidebar__search’, ‘sidebar menu’, and ‘SidebarChats’. They consist of a header for user information, a search bar for searching users, a sidebar menu to navigate between your chats, groups, and users, and a SidebarChats component that displays your recent chats, showing each user's name, photo, and last message.

- ChatViews: it consists of many components as follows:

- 1. ‘chat__header’: contains the information of the user you’re talking to, their online status, and profile picture. It displays buttons for audio and video calls and also shows when the user is typing.

- 2. ‘chat__body--container’: contains the messages exchanged with the other user. Its messages component displays text messages, images with their messages, and audio messages with information such as the audio time and whether the audio was played. At the end of this component, the ‘seen’ element shows whether messages were seen.

- 3. ‘AudioPlayer’: a React component that displays an audio message with a slider to navigate it, along with the full time and current time of the audio. This view component is loaded inside ‘chat__body--container’.

- 4. ‘ChatFooter’: contains an input to type a message, a button that sends the message while you are typing and otherwise lets you record audio, and a button to import images and files.

- 5. ‘MediaPreview’: a React component that lets us preview the images or files we have selected to send in our chat. They are displayed on a carousel where users can slide through the images or files and type a specific message for each one.

- 6. ‘ImagePreview’: when images are sent in our chat, this component displays them in full screen with a smooth animation. The component mounts after clicking on an image.

- scalePage: a view function that increases the size of our web app when displayed on large screens like full HD screens and 4K screens.

- CallViews: a set of React components that contain all call view elements. They can be dragged all over the screen, and they consist of:

- 1. ‘Buttons’: a call button with a red version of it, and a green video call button.

- 2. ‘AudioCallView’: a view component that allows answering incoming audio calls, displays the call with a timer, and allows canceling the call.

- 3. ‘StartVideoCallView’: a view component that displays a video of ourselves by connecting to the local MediaAPI. It either waits for the other user to accept the call or displays a button for us to answer an incoming video call.

- 4. ‘VideoCallView’: a view component that displays a video of us and the other user. It allows switching cameras, disabling the camera and audio, and going fullscreen.

- RouteViews: React components that contain all of our views to create local component navigation: ‘VideoCallRoute’, ‘SideBarMenuRoute’, and ‘ChatsRoute’.

Client Side Models (Model)

Client-side models are the logic that allows our frontend to interact with databases and multiple local and server-side APIs. They consist of:

- Firebase SDK: It’s an SDK that is used to build the database of our web app.

- AppModel: A model that generates a user after authenticating and it also makes sure that we have the latest version of our web assets.

- ChatModels: the model logic for sending messages to the database, establishing listeners for new messages, and listening to whether the other user is online and whether they’re typing. It also sends our media like images and audio to the database storage.

- SidebarChatsModel: Logic that listens to the latest messages of users and gives us an array of all your new messages from users. It also gives the number of unread messages and the online status of users, and it orders the users by the time of the last message.

- UsersSearchModel: Logic that searches for users in our database. It uses Algolia search, which holds a list of our users and is linked to our database on the server.

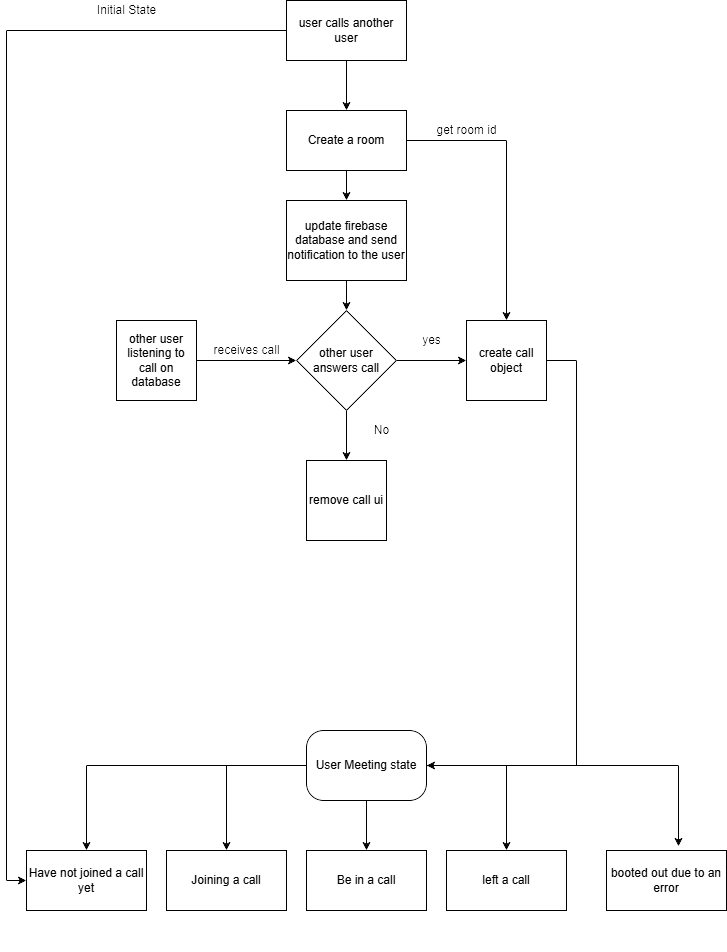

- CallModel: Logic that uses the Daily SDK to create a call on our web app and also send the data to our server and interacts with DailyAPI.

Client Side Controllers (Controller)

It consists of React components that link our views with the users’ specific models:

- App Controller: Links the authenticated user to all components and runs the scalePage function to adjust the size of our app. It also loads Firebase and attaches all the components, so we can consider it a wrapper for our components.

- SideBarController: Links users’ data with the list of their latest chats. It also links our menus with their model logic and links the search bar with the Algolia search API.

- ChatController: this is a very big controller that links most of the messaging and chat features.

- CallController: Links the call model with its views.

Server Side Model

Not all features are done on the frontend as the SDKs we used require some server-side functionalities and they consist of:

- CallServerModel: Logic that allows us to create rooms for calls by interacting with Daily API and updating our Firestore database.

- TranscriptModel: Logic on the server that receives an audio file and interacts with Google Cloud speech-to-text API and it gives a transcript for audio messages.

- Online Status Handler: A listener that listens to the online status of users and updates the database accordingly.

- Notification Model: A service that sends notifications to other users.

- AlgoliaSaver: A listener that listens to new users on our database and updates Algolia accordingly so we can use it for the search feature on the frontend.

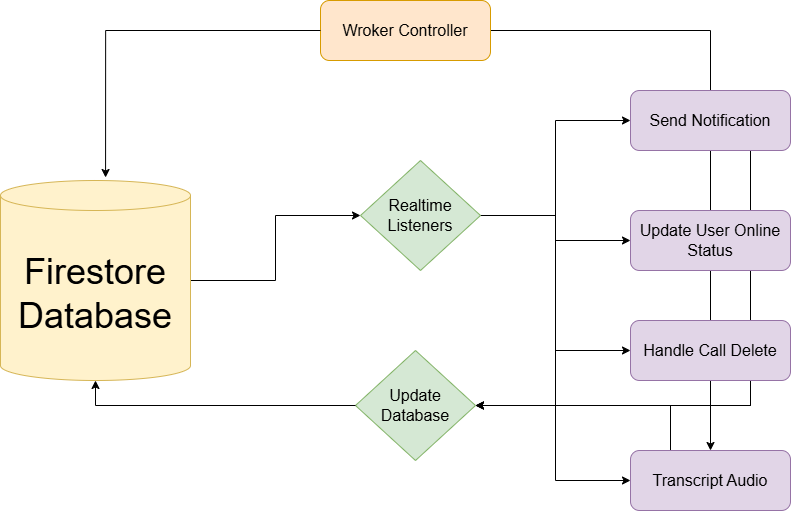

- Server Side Controllers: ‘CallServer’, an API endpoint that contains callModel, and ‘Worker’, a worker service that runs all our Firebase handling services.

Chat Flow Chart

Sidebar Flow Chart

Model Call Flow Chart

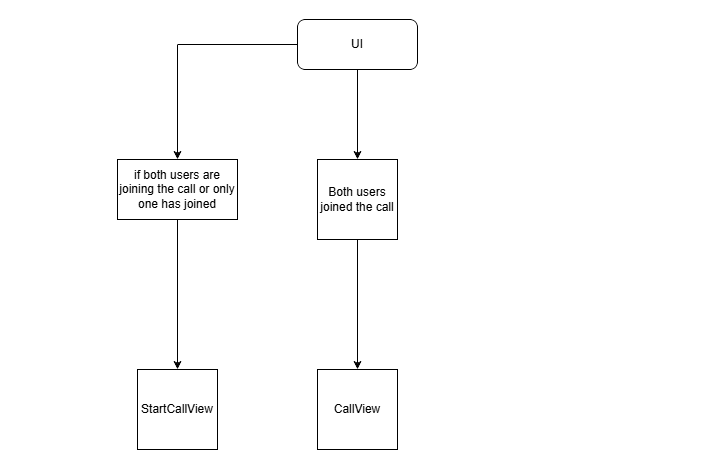

View Call Flow Chart

Backend Worker Flow Chart

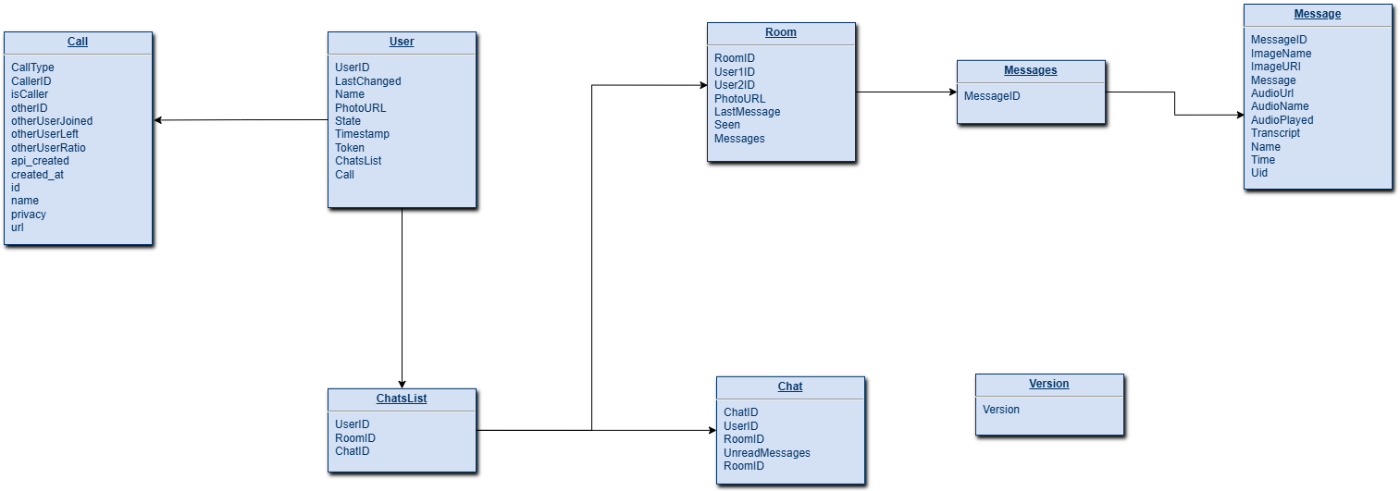

Database Design

Our web app stores its data in Firestore, a Firebase NoSQL database. We store users’ information, a list of all messages, and a list of chats. We also store chats in rooms.

Here’s what can be found in our database:

- Users Data after Authentication.

- Rooms that contain all the details of messages.

- List of latest chats for each user.

- List of notifications to be sent.

- List of audio to be transcribed.
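
Pieced together from the code shown later in this article, the collections might look roughly like the sketch below. The field lists are partial, the sample values are placeholders, subcollections are drawn as nested objects, and the `messagePath` helper is hypothetical, so treat this as an illustration rather than the full schema:

```javascript
// Partial, illustrative shapes; only fields that appear in this article's code are listed.
const exampleDatabase = {
  users: {
    "<uid>": { state: "online", last_changed: "<timestamp>", token: "<messaging token>" },
  },
  rooms: {
    "<roomID>": {
      messages: {
        "<messageID>": { uid: "<sender uid>", imageUrl: "uploading", transcript: "" },
      },
    },
  },
  notifications: {
    "<docID>": { userID: "", title: "", body: "", photoURL: "", token: "" },
  },
  transcripts: {
    "<docID>": { audioName: "", short: true, roomID: "", messageID: "" },
  },
};

// Hypothetical helper that builds the path of a message document.
function messagePath(roomID, messageID) {
  return `rooms/${roomID}/messages/${messageID}`;
}
```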

Explaining the Magical Code 🔮

In the following chapters, I’m going to give a quick explanation and tutorials about certain functionalities in ChatPlus. I’ll show you the JS code and explain the algorithm behind it and also provide you with the right integration tool to link your code with the database.

Setting Up & Abstracting Firebase

Our web app uses Firebase as the BaaS for backend development. It is useful to abstract all of its functions into one module like the following:

import firebase from "firebase/compat/app";

import "firebase/compat/auth";

import "firebase/compat/firestore";

import "firebase/compat/database"

import "firebase/compat/messaging";

import "firebase/compat/storage"

import { firebaseConfig } from "./configKeys";

const firebaseApp = firebase.initializeApp(firebaseConfig);

const db = firebaseApp.firestore();

// Bind to db so the method keeps its `this` when it is called on its own.

const runTransaction = db.runTransaction.bind(db);

const db2 = firebaseApp.database();

const auth = firebaseApp.auth();

const provider = new firebase.auth.GoogleAuthProvider();

provider.setCustomParameters({ prompt: 'select_account' });

const createTimestamp = firebase.firestore.FieldValue.serverTimestamp;

const createTimestamp2 = firebase.database.ServerValue.TIMESTAMP;

const messaging = "serviceWorker" in navigator && "PushManager" in window ? firebase.messaging() : null;

const fieldIncrement = firebase.firestore.FieldValue.increment;

const arrayUnion = firebase.firestore.FieldValue.arrayUnion;

const storage = firebase.storage().ref("images");

const audioStorage = firebase.storage().ref("audios");

export { auth, provider, createTimestamp, messaging, fieldIncrement, arrayUnion, storage, audioStorage, db2, createTimestamp2, runTransaction };

export default db;

Handling Online Status

The online status of users is implemented with the Firebase Realtime Database connectivity feature: the frontend connects to “.info/connected” and updates both Firestore and the Realtime Database accordingly:

var disconnectRef;

function setOnlineStatus(uid) {

try {

console.log("setting up online status");

const isOfflineForDatabase = {

state: 'offline',

last_changed: createTimestamp2,

id: uid,

};

const isOnlineForDatabase = {

state: 'online',

last_changed: createTimestamp2,

id: uid

};

const userStatusFirestoreRef = db.collection("users").doc(uid);

const userStatusDatabaseRef = db2.ref('/status/' + uid);

// Firestore uses a different server timestamp value, so we'll

// create two more constants for Firestore state.

const isOfflineForFirestore = {

state: 'offline',

last_changed: createTimestamp(),

};

const isOnlineForFirestore = {

state: 'online',

last_changed: createTimestamp(),

};

disconnectRef = db2.ref('.info/connected').on('value', function (snapshot) {

console.log("listening to database connected info")

if (snapshot.val() === false) {

// Instead of simply returning, we'll also set Firestore's state

// to 'offline'. This ensures that our Firestore cache is aware

// of the switch to 'offline.'

userStatusFirestoreRef.set(isOfflineForFirestore, { merge: true });

return;

};

userStatusDatabaseRef.onDisconnect().set(isOfflineForDatabase).then(function () {

userStatusDatabaseRef.set(isOnlineForDatabase);

// We'll also add Firestore set here for when we come online.

userStatusFirestoreRef.set(isOnlineForFirestore, { merge: true });

});

});

} catch (error) {

console.log("error setting online status: ", error);

}

};

On our backend we also set up a listener that listens to changes to our database and updates Firestore accordingly. This function can also give us the online status of the user in real time so we can run other functions inside it as well:

async function handleOnlineStatus(data, event) {

try {

console.log("setting online status with event: ", event);

// Get the data written to Realtime Database

const eventStatus = data.val();

// Then use other event data to create a reference to the

// corresponding Firestore document.

const userStatusFirestoreRef = db.doc(`users/${eventStatus.id}`);

// It is likely that the Realtime Database change that triggered

// this event has already been overwritten by a fast change in

// online / offline status, so we'll re-read the current data

// and compare the timestamps.

const statusSnapshot = await data.ref.once('value');

const status = statusSnapshot.val();

// If the current timestamp for this data is newer than

// the data that triggered this event, we exit this function.

if (eventStatus.state === "online") {

console.log("event status: ", eventStatus)

console.log("status: ", status)

}

if (status.last_changed <= eventStatus.last_changed) {

// Otherwise, we convert the last_changed field to a Date

eventStatus.last_changed = new Date(eventStatus.last_changed);

//handle the call delete

handleCallDelete(eventStatus);

// ... and write it to Firestore.

await userStatusFirestoreRef.set(eventStatus, { merge: true });

console.log("user: " + eventStatus.id + " online status was successfully updated with data: " + eventStatus.state);

} else {

console.log("next status timestamp is newer for user: ", eventStatus.id);

}

} catch (error) {

console.log("handle online status crashed with error :", error)

}

}
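
The timestamp comparison in the handler is what protects against stale events. Isolated as a pure function (the name `isEventStillCurrent` is made up for this sketch), the check can be read and tested on its own:

```javascript
// Returns true when the event that triggered the handler is still the
// latest known status, i.e. no newer write has replaced it in the database.
function isEventStillCurrent(currentStatus, eventStatus) {
  return currentStatus.last_changed <= eventStatus.last_changed;
}

const eventStatus = { state: "offline", last_changed: 1000 };
const sameStatus = { state: "offline", last_changed: 1000 };
const newerStatus = { state: "online", last_changed: 1500 };
```

When the current status is newer than the event, as with `newerStatus` here, the handler skips the Firestore write and waits for the event that belongs to the newer status.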

Notifications

Notifications are a great feature and they are implemented using Firebase messaging. On our frontend, if the user’s browser supports notifications, we configure it and retrieve the user’s Firebase messaging token:

const configureNotif = (docID) => {

messaging.getToken().then((token) => {

console.log(token);

db.collection("users").doc(docID).set({

token: token

}, { merge: true })

}).catch(e => {

console.log(e.message);

db.collection("users").doc(docID).set({

token: ""

}, { merge: true });

});

}

Whenever a user sends a message, we add a notification to our database:

db.collection("notifications").add({

userID: user.uid,

title: user.displayName,

body: inputText,

photoURL: user.photoURL,

token: token,

});

On our backend, we listen to the notifications collection and use Firebase messaging to send each notification to the user:

let listening = false;

db.collection("notifications").onSnapshot(snap => {

if (!listening) {

console.log("listening for notifications...");

listening = true;

}

const docs = snap.docChanges();

if (docs.length > 0) {

docs.forEach(async change => {

if (change.type === "added") {

const data = change.doc.data();

if (data) {

const message = {

data: data,

token: data.token

};

await db.collection("notifications").doc(change.doc.id).delete();

try {

const response = await messaging.send(message);

console.log("notification successfully sent :", data);

} catch (error) {

console.log("error sending notification ", error);

};

};

};

});

};

});
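
Firebase Cloud Messaging expects every value in a message's `data` payload to be a string. A small helper like the hypothetical `buildNotificationMessage` below could normalize a notification document before it is passed to `messaging.send`. It is a sketch, not part of the original backend, and unlike the listener above it leaves the token out of the payload:

```javascript
// Builds an FCM message from a notification document.
// Skips the token and empty fields, and converts every value to a string.
function buildNotificationMessage(notification) {
  const data = {};
  for (const [key, value] of Object.entries(notification)) {
    if (key === "token" || value === undefined || value === null) continue;
    data[key] = String(value);
  }
  return { data, token: notification.token };
}

const message = buildNotificationMessage({
  userID: "u1",
  title: "Alice",
  body: "Hi!",
  photoURL: null,
  token: "device-token",
});
```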

AI Audio Transcription

Our web application allows users to send audio messages to each other, and one of its features is the ability to convert this audio to text for audio recorded in English, French, and Spanish. This feature is implemented with the Google Cloud Speech-to-Text API. Our backend listens for new transcript requests added to Firestore, transcribes them, and then writes the result into the database:

db.collection("transcripts").onSnapshot(snap => {

const docs = snap.docChanges();

if (docs.length > 0) {

docs.forEach(async change => {

if (change.type === "added") {

const data = change.doc.data();

if (data) {

db.collection("transcripts").doc(change.doc.id).delete();

try {

const text = await textToAudio(data.audioName, data.short, data.supportWebM);

const roomRef = db.collection("rooms").doc(data.roomID).collection("messages").doc(data.messageID);

await db.runTransaction(async transaction => {

// Only write the transcript if the message still exists and is not being deleted.

const roomDoc = await transaction.get(roomRef);

if (roomDoc.exists && !roomDoc.data()?.delete) {

transaction.update(roomRef, {

transcript: text

});

console.log("transcript added with text: ", text);

} else {

console.log("room is deleted");

}

});

} catch (error) {

console.log("error transcribing audio: ", error);

};

};

};

});

};

});

Obviously, your eyes are on that textToAudio function and you’re wondering how I made it. Don’t worry, I got you:

// Imports the Google Cloud client library

const speech = require('@google-cloud/speech').v1p1beta1;

const { gcsUriLink } = require("./configKeys")

// Creates a client

const client = new speech.SpeechClient({ keyFilename: "./audio_transcript.json" });

async function textToAudio(audioName, isShort) {

// The path to the remote audio file in Cloud Storage

const gcsUri = gcsUriLink + "/audios/" + audioName;

// The audio file's encoding, sample rate in hertz, and BCP-47 language code

const audio = {

uri: gcsUri,

};

const config = {

encoding: "MP3",

sampleRateHertz: 48000,

languageCode: 'en-US',

alternativeLanguageCodes: ['es-ES', 'fr-FR']

};

console.log("audio config: ", config);

const request = {

audio: audio,

config: config,

};

// Detects speech in the audio file

if (isShort) {

const [response] = await client.recognize(request);

return response.results.map(result => result.alternatives[0].transcript).join('\n');

}

const [operation] = await client.longRunningRecognize(request);

const [response] = await operation.promise().catch(e => console.log("response promise error: ", e));

return response.results.map(result => result.alternatives[0].transcript).join('\n');

};

module.exports = textToAudio;
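
The response mapping can fail when recognition returns nothing, for example when the long-running operation rejects and the `catch` resolves to `undefined`. A defensive variant of that mapping could look like the sketch below, where `extractTranscript` is a hypothetical helper name:

```javascript
// Joins the top alternative of every result; returns "" when nothing was recognized.
function extractTranscript(response) {
  const results = response?.results ?? [];
  return results
    .map(result => result.alternatives?.[0]?.transcript ?? "")
    .filter(text => text.length > 0)
    .join("\n");
}

const sample = {
  results: [
    { alternatives: [{ transcript: "hello there" }] },
    { alternatives: [] },
    { alternatives: [{ transcript: "how are you" }] },
  ],
};
```

Calling `extractTranscript(sample)` joins the two non-empty transcripts with a newline, and `extractTranscript(undefined)` yields an empty string instead of throwing.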

Video Call Feature

Our web app uses the Daily API to implement real-time WebRTC connections, which allow users to make video calls to each other. First, we set up a backend call server with several API endpoints to create and delete rooms in Daily:

const express = require("express");

const cors = require("cors");

const app = express();

app.use(cors());

app.use(express.json());

app.delete("/delete-call", async (req, res) => {

console.log("delete call data: ", req.body);

deleteCallFromUser(req.body.id1);

deleteCallFromUser(req.body.id2);

try {

await fetch("https://api.daily.co/v1/rooms/" + req.body.roomName, {

headers: {

Authorization: `Bearer ${dailyApiKey}`,

"Content-Type": "application/json"

},

method: "DELETE"

});

} catch(e) {

console.log("error deleting room for call delete!!");

console.log(e);

}

res.status(200).send("delete-call success !!");

});

app.post("/create-room/:roomName", async (req, res) => {

var room = await fetch("https://api.daily.co/v1/rooms/", {

headers: {

Authorization: `Bearer ${dailyApiKey}`,

"Content-Type": "application/json"

},

method: "POST",

body: JSON.stringify({

name: req.params.roomName

})

});

room = await room.json();

console.log(room);

res.json(room);

});

app.delete("/delete-room/:roomName", async (req, res) => {

var deleteResponse = await fetch("https://api.daily.co/v1/rooms/" + req.params.roomName, {

headers: {

Authorization: `Bearer ${dailyApiKey}`,

"Content-Type": "application/json"

},

method: "DELETE"

});

deleteResponse = await deleteResponse.json();

console.log(deleteResponse);

res.json(deleteResponse);

})

app.listen(process.env.PORT || 7000, () => {

console.log("call server is running");

});

const deleteCallFromUser = userID => db.collection("users").doc(userID).collection("call").doc("call").delete();
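
The three endpoints repeat the same headers and URL construction. One possible refactor, shown only as a sketch, is a small helper that builds the request; `dailyRoomRequest` and `DAILY_ROOMS_URL` are names invented for this example:

```javascript
const DAILY_ROOMS_URL = "https://api.daily.co/v1/rooms/";

// Builds the URL and fetch options for a Daily rooms request.
function dailyRoomRequest(method, apiKey, roomName) {
  const options = {
    method,
    headers: {
      Authorization: `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
  };
  if (method === "POST") {
    // Creating a room: the name goes in the JSON body.
    options.body = JSON.stringify({ name: roomName });
    return { url: DAILY_ROOMS_URL, options };
  }
  // Other methods (e.g. DELETE) address the room through the URL.
  return { url: DAILY_ROOMS_URL + encodeURIComponent(roomName), options };
}

const createRequest = dailyRoomRequest("POST", "test-key", "room-1");
const deleteRequest = dailyRoomRequest("DELETE", "test-key", "room-1");
```

Each route handler could then call `fetch(request.url, request.options)` with the object returned by the helper.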

Great, now it’s time to create call rooms and use the Daily JS SDK to connect to these rooms and send and receive data from them:

export default async function startVideoCall(dispatch, receiverQuery, userQuery, id, otherID, userName, otherUserName, sendNotif, userPhoto, otherPhoto, audio) {

var room = null;

const call = DailyIframe.createCallObject();

const roomName = nanoid();

window.callDelete = {

id1: id,

id2: otherID,

roomName

}

dispatch({ type: "set_other_user_name", otherUserName });

console.log("audio: ", audio);

if (audio) {

dispatch({ type: "set_other_user_photo", photo: otherPhoto });

dispatch({ type: "set_call_type", callType: "audio" });

} else {

dispatch({ type: "set_other_user_photo", photo: null });

dispatch({ type: "set_call_type", callType: "video" });

}

dispatch({ type: "set_caller", caller: true });

dispatch({ type: "set_call", call });

dispatch({ type: "set_call_state", callState: "state_creating" });

try {

room = await createRoom(roomName);

console.log("created room: ", room);

dispatch({ type: "set_call_room", callRoom: room });

} catch (error) {

room = null;

console.log('Error creating room', error);

await call.destroy();

dispatch({ type: "set_call_room", callRoom: null });

dispatch({ type: "set_call", call: null });

dispatch({ type: "set_call_state", callState: "state_idle" });

window.callDelete = null;

//destroy the call object;

};

if (room) {

dispatch({ type: "set_call_state", callState: "state_joining" });

dispatch({ type: "set_call_queries", callQueries: { userQuery, receiverQuery } });

try {

await db.runTransaction(async transaction => {

console.log("running transaction");

var userData = (await transaction.get(receiverQuery)).data();

//console.log("user data: ", userData);

if (!userData || !userData?.callerID || userData?.otherUserLeft) {

console.log("running set");

transaction.set(receiverQuery, {

room,

callType: audio ? "audio" : "video",

isCaller: false,

otherUserLeft: false,

callerID: id,

otherID,

otherUserName: userName,

otherUserRatio: window.screen.width / window.screen.height,

photo: audio ? userPhoto : ""

});

transaction.set(userQuery, {

room,

callType: audio ? "audio" : "video",

isCaller: true,

otherUserLeft: false,

otherUserJoined: false,

callerID: id,

otherID

});

} else {

console.log('transaction failed');

throw userData;

}

});

if (sendNotif) {

sendNotif();

const notifTimeout = setInterval(() => {

sendNotif();

}, 1500);

dispatch({ type: "set_notif_tiemout", notifTimeout });

}

call.join({ url: room.url, videoSource: !audio });

} catch (userData) {

//delete the room we made

deleteRoom(roomName);

await call.destroy();

if (userData.otherID === id) {

console.log("you and the other user are calling each other at the same time");

joinCall(dispatch, receiverQuery, userQuery, userData.room, userName, audio ? userPhoto : "", userData.callType);

} else {

console.log("other user already in a call");

dispatch({ type: "set_call_room", callRoom: null });

dispatch({ type: "set_call", call: null });

dispatch({ type: "set_call_state", callState: "state_otherUser_calling" });

}

};

};

};

receiverQuery and userQuery are just Firestore document references. The rest of the app has view components that react to the state changes triggered by the function above, and our call UI elements appear accordingly.
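
The branching inside the transaction and its `catch` block boils down to three outcomes. The sketch below restates that decision as a pure function; `resolveCallAttempt` and the returned labels are hypothetical names used only for illustration:

```javascript
// receiverCall: the receiver's current call document data (or undefined).
// callerID: the id of the user who is trying to start the call.
function resolveCallAttempt(receiverCall, callerID) {
  const receiverIsFree =
    !receiverCall || !receiverCall.callerID || receiverCall.otherUserLeft;
  if (receiverIsFree) return "start_call";
  // The receiver is already calling us: join their room instead.
  if (receiverCall.otherID === callerID) return "join_existing_call";
  return "receiver_busy";
}

const noCall = resolveCallAttempt(undefined, "a");
const mutualCall = resolveCallAttempt({ callerID: "b", otherID: "a" }, "a");
const busyCall = resolveCallAttempt({ callerID: "c", otherID: "b" }, "a");
```

Running the check inside a Firestore transaction matters because two users can try to call the same person at the same moment, and only one of those writes should win.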

Moving the Call Element Around

This next function is the magic that allows you to drag the call element all over the page:

export function dragElement(elmnt, page) {

var pos1 = 0, pos2 = 0, pos3 = 0, pos4 = 0, top, left, prevTop = 0, prevLeft = 0, x, y, maxTop, maxLeft;

const widthRatio = page.width / window.innerWidth;

const heightRatio = page.height / window.innerHeight;

//clear element's mouse listeners

closeDragElement();

// set the listeners

elmnt.addEventListener("mousedown", dragMouseDown);

elmnt.addEventListener("touchstart", dragMouseDown, { passive: false });

function dragMouseDown(e) {

e = e || window.event;

// get the mouse cursor position at startup:

if (e.type === "touchstart") {

if (typeof(e.target.className) === "string") {

if (!e.target.className.includes("btn")) {

e.preventDefault();

}

} else if (typeof e.target.className !== "function") {

e.stopPropagation();

}

pos3 = e.touches[0].clientX * widthRatio;

pos4 = e.touches[0].clientY * heightRatio;

} else {

e.preventDefault();

pos3 = e.clientX * widthRatio;

pos4 = e.clientY * heightRatio;

};

maxTop = elmnt.offsetParent.offsetHeight - elmnt.offsetHeight;

maxLeft = elmnt.offsetParent.offsetWidth - elmnt.offsetWidth;

document.addEventListener("mouseup", closeDragElement);

document.addEventListener("touchend", closeDragElement, { passive: false });

// call a function whenever the cursor moves:

document.addEventListener("mousemove", elementDrag);

document.addEventListener("touchmove", elementDrag, { passive: false });

}

function elementDrag(e) {

e = e || window.event;

e.preventDefault();

// calculate the new cursor position:

if (e.type === "touchmove") {

x = e.touches[0].clientX * widthRatio;

y = e.touches[0].clientY * heightRatio;

} else {

e.preventDefault();

x = e.clientX * widthRatio;

y = e.clientY * heightRatio;

};

pos1 = pos3 - x;

pos2 = pos4 - y;

pos3 = x

pos4 = y;

// set the element's new position:

top = elmnt.offsetTop - pos2;

left = elmnt.offsetLeft - pos1;

//prevent the element from overflowing the viewport

if (top >= 0 && top <= maxTop) {

elmnt.style.top = top + "px";

} else if ((top > maxTop && pos4 < prevTop) || (top < 0 && pos4 > prevTop)) {

elmnt.style.top = top + "px";

};

if (left >= 0 && left <= maxLeft) {

elmnt.style.left = left + "px";

} else if ((left > maxLeft && pos3 < prevLeft) || (left < 0 && pos3 > prevLeft)) {

elmnt.style.left = left + "px";

};

prevTop = y; prevLeft = x;

}

function closeDragElement() {

// stop moving when mouse button is released:

document.removeEventListener("mouseup", closeDragElement);

document.removeEventListener("touchend", closeDragElement);

document.removeEventListener("mousemove", elementDrag);

document.removeEventListener("touchmove", elementDrag);

};

return function() {

elmnt.removeEventListener("mousedown", dragMouseDown);

elmnt.removeEventListener("touchstart", dragMouseDown);

closeDragElement();

};

};
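
The function above keeps the element inside its parent by only applying moves that stay in bounds or head back toward them. A simpler alternative, not what the original does, is to clamp the computed position. The helpers `clamp` and `nextPosition` below are assumptions made for this sketch:

```javascript
// Keeps a coordinate inside the range [0, max].
function clamp(value, max) {
  return Math.min(Math.max(value, 0), Math.max(max, 0));
}

// Computes the next top/left for an element, given the pointer movement.
function nextPosition(current, delta, bounds) {
  return {
    top: clamp(current.top + delta.y, bounds.maxTop),
    left: clamp(current.left + delta.x, bounds.maxLeft),
  };
}

const moved = nextPosition(
  { top: 10, left: 10 },
  { x: -50, y: 500 },
  { maxTop: 300, maxLeft: 400 }
);
```

Here the pointer tries to move the element past the left and bottom edges, and the clamped result pins it to `left: 0` and `top: 300`.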

Drag and Drop Images

You can drag and drop images onto your chat and send them to the other user. This functionality is made possible by the effect below, where “setSRC” and “setImage” are the state functions that trigger the appearance of the “ImagePreview” component:

useEffect(() => {

const dropArea = document.querySelector(".chat");

['dragenter', 'dragover', 'dragleave', 'drop'].forEach(eventName => {

dropArea.addEventListener(eventName, e => {

e.preventDefault();

e.stopPropagation();

}, false);

});

['dragenter', 'dragover'].forEach(eventName => {

dropArea.addEventListener(eventName, () => setShowDrag(true), false)

});

['dragleave', 'drop'].forEach(eventName => {

dropArea.addEventListener(eventName, () => setShowDrag(false), false)

});

dropArea.addEventListener('drop', e => {

if (window.navigator.onLine) {

if (e.dataTransfer?.files) {

const dropedFile = e.dataTransfer.files;

console.log("dropped file: ", dropedFile);

const { imageFiles, imagesSrc } = mediaIndexer(dropedFile);

setSRC(prevImages => [...prevImages, ...imagesSrc]);

setImage(prevFiles => [...prevFiles, ...imageFiles]);

setIsMedia("images_dropped");

};

};

}, false);

}, []);

The mediaIndexer is a simple function that indexes the blob of images that we provide to it:

function mediaIndexer(files) {

const imagesSrc = [];

const filesArray = Array.from(files);

filesArray.forEach((file, index) => {

imagesSrc[index] = URL.createObjectURL(file);

});

return { imagesSrc, imageFiles: filesArray };

}
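
URLs created with `URL.createObjectURL` stay alive until the page unloads unless they are released with `URL.revokeObjectURL`. A cleanup helper could look like the sketch below. The name `releasePreviews` and the injectable `revoke` parameter are assumptions, added so the helper can also run outside a browser:

```javascript
// Releases preview URLs created with URL.createObjectURL.
// `revoke` is injectable so the helper can be exercised outside a browser.
function releasePreviews(imagesSrc, revoke = src => URL.revokeObjectURL(src)) {
  imagesSrc.forEach(src => revoke(src));
  return [];
}

const revoked = [];
const remaining = releasePreviews(["blob:a", "blob:b"], src => revoked.push(src));
```

It could be called once the previews are no longer displayed, for example after the images were sent or the preview was closed.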

Smooth Animated Scroll

function smoothScroll({ element, duration, fullScroll, exitFunction, halfScroll }) {

requestAnimationFrame(start => {

const isElementScrolledScreen = element.scrollHeight - element.scrollTop >= element.offsetHeight * 2;

const scrollAmount = fullScroll ? element.offsetHeight : element.scrollHeight - element.offsetHeight - element.scrollTop;

const initialScrollTop = fullScroll && isElementScrolledScreen && !halfScroll ? element.scrollHeight - element.offsetHeight * 2 : element.scrollTop;

requestAnimationFrame(function animate(time) {

let timeFraction = (time - start) / duration;

if (timeFraction > 1) timeFraction = 1;

element.scrollTop = initialScrollTop + timeFraction * scrollAmount;

if (timeFraction < 1) {

requestAnimationFrame(animate);

} else {

exitFunction && exitFunction();

}

});

});

};
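
At its core the animation is a linear interpolation between the initial scroll position and the target. The sketch below isolates that math and adds an optional easing curve. The easing function and all helper names are assumptions and are not part of the original function:

```javascript
// Linear progress, capped at 1, as in the animation loop above.
function timeFraction(elapsed, duration) {
  return Math.min(elapsed / duration, 1);
}

// Optional easing (an assumption, not in the original): slow start and end.
function easeInOutQuad(t) {
  return t < 0.5 ? 2 * t * t : 1 - Math.pow(-2 * t + 2, 2) / 2;
}

// Scroll position after `elapsed` ms of an animation lasting `duration` ms.
function scrollTopAt(initialScrollTop, scrollAmount, elapsed, duration, easing = t => t) {
  return initialScrollTop + easing(timeFraction(elapsed, duration)) * scrollAmount;
}

const halfway = scrollTopAt(100, 200, 150, 300);
const finished = scrollTopAt(100, 200, 600, 300);
```

Passing `easeInOutQuad` as the last argument keeps the same start and end positions but makes the movement accelerate and then slow down.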

Handling Media Upload Error

Sometimes users may experience issues when uploading media like images and audio, such as losing their internet connection or closing the app while media is uploading to the server. In this case, we need to update the message that contained the media with an error state. This next function runs on the backend:

function handleMediaUploadError() {

var userMessageListeners = {}

var messagesListeners = {}

//listen to all the rooms

db.collection("rooms").onSnapshot(roomsSnap => {

roomsSnap.docChanges().forEach(roomChange => {

/*attach the messages listener only once, which is why we check that the change type is "added";

room updates are basically updates about the last message, which we don't need here, and

when this listener executes for the first time it will run with a change type of "added"*/

if (roomChange.type === "added") {

/*listen to the messages of each room and store the unsubscribe function in our messagesListeners object */

const messagesReference = db.collection("rooms").doc(roomChange.doc.id).collection("messages");

messagesListeners[roomChange.doc.id] = messagesReference.onSnapshot(messagesSnap => {

messagesSnap.docChanges().forEach(messageChange => {

/*When a message is added we check whether it had a media being uploaded

if you get any server error and your media was stuck on uploading then we can also

check whether the media is being uploaded because we also listen on "modified" change*/

if (messageChange.type === "added" || messageChange.type === "modified") {

const messageData = messageChange.doc.data();

const mediaType = messageData.imageUrl ? "image" : messageData.audioUrl ? "audio" : false;

if (mediaType) {

if ((messageData[mediaType + "Url"] === "uploading") && !userMessageListeners[messageChange.doc.id]) {

/*if we have a media loading we start listening to the online state of the user who sent the message and store

the unsubscribe function to userMessageListeners Object*/

userMessageListeners[messageChange.doc.id] = db.collection("users").doc(messageData.uid).onSnapshot(userSnap => {

const userStatus = userSnap.data();

/*if the user becomes offline we update the uploading status to error; this will trigger the messages listener

to give us another snapshot with type "modified" */

if (userStatus.state === "offline") {

console.log(`user ${userStatus.name} went offline so we're setting the ${mediaType}Url to error`);

messagesReference.doc(messageChange.doc.id).set({

[mediaType + "Url"]: "error"

}, { merge: true });

}

});

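} else if (messageData[mediaType + "Url"] !== "uploading" && userMessageListeners[messageChange.doc.id]) {

/*after our message was modified, we unsubscribe from the user listener whether we had an error

or the media was successfully uploaded: if the URL isn't "uploading" then it's either a real URL or "error"*/

userMessageListeners[messageChange.doc.id]();

/*forget the stored unsubscribe function so it is not called again on later changes */

delete userMessageListeners[messageChange.doc.id];

}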

}

}

})

});

} else if (roomChange.type === "removed") {

/*when a room is deleted we stop listening to its messages changes */

if (messagesListeners[roomChange.doc.id]) {

messagesListeners[roomChange.doc.id]();

}

}

});

});

}
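
The decision the listener makes for each message can be isolated into a small pure function, which makes it easier to reason about and test without Firestore. This is only a minimal sketch: the name mediaUploadAction and the "watch" / "unwatch" / "ignore" labels are hypothetical and are not part of the ChatPlus codebase, and it assumes the same imageUrl / audioUrl fields used above.

```javascript
// Hypothetical helper (not part of ChatPlus): given a message document's data and
// whether we are already watching the sender's online state, decide what to do.
function mediaUploadAction(messageData, isWatchingSender) {
  // Same media detection as in handleMediaUploadError
  const mediaType = messageData.imageUrl ? "image" : messageData.audioUrl ? "audio" : null;
  if (!mediaType) {
    return { action: "ignore", mediaType: null };
  }
  const isUploading = messageData[mediaType + "Url"] === "uploading";
  if (isUploading && !isWatchingSender) {
    // Media is in flight: start listening to the sender's online state
    return { action: "watch", mediaType };
  }
  if (!isUploading && isWatchingSender) {
    // The URL is now a real URL or "error": stop listening to the sender
    return { action: "unwatch", mediaType };
  }
  return { action: "ignore", mediaType };
}

console.log(mediaUploadAction({ imageUrl: "uploading" }, false).action); // watch
console.log(mediaUploadAction({ imageUrl: "error" }, true).action); // unwatch
```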

Sending Welcome Messages

When a new user signs up to ChatPlus, you can set up the backend to send them welcome messages. These messages can come from you, the owner of the web app, so new users can reply and you can interact with them. You can also set multiple welcome users, so that a new user receives welcome messages from each of them. For that, we first set up a backend function that sends a message from one user to another. This function runs a Firestore transaction because it needs to make sure that the receiving user is not deleting their account:

async function sendWelcomeChat(welcomeUser, newUser, message) {

try {

await db.runTransaction(async transaction => {

const newUserData = (await transaction.get(db.collection("users").doc(newUser.id))).data();

if (newUserData?.name && !newUserData?.delete) {

console.log(`user ${newUserData.name} is not being deleted, sending message`);

const operations = [];

const roomInfo = {

lastMessage: message,

seen: false,

}

const roomID = newUser.id > welcomeUser.id ? newUser.id + welcomeUser.id : welcomeUser.id + newUser.id;

const messageToSend = {

name: welcomeUser.name,

message: message,

uid: welcomeUser.id,

timestamp: createTimestamp(),

time: new Date().toUTCString(),

}

operations.push(transaction.set(db.collection("rooms").doc(roomID), roomInfo, { merge: true }));

operations.push(transaction.set(db.collection("users").doc(newUser.id).collection("chats").doc(roomID), {

timestamp: createTimestamp(),

photoURL: welcomeUser.photoURL,

name: welcomeUser.name,

userID: welcomeUser.id,

unreadMessages: fieldIncrement(1),

}, { merge: true }));

/*db.collection("users").doc(user.id).collection("chats").doc(roomID).set({

timestamp: createTimestamp(),

photoURL: state.photoURL ? state.photoURL

name: state.name,

userID: state.userID

}, { merge: true });*/

operations.push(transaction.set(db.collection("rooms").doc(roomID).collection("messages").doc(), messageToSend, { merge: true }));

return Promise.all(operations);

} else {

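/*newUserData can be undefined here, so we read the name from the newUser argument instead */

throw new Error(`user ${newUser.name} is deleting account`);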

};

});

console.log(`Successfully sent this message "${message}" to user: ${newUser.name}`);

} catch (error) {

console.log(`error sending this message "${message}" to user: ${newUser.name}`);

console.log(error);

};

};
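
One detail worth highlighting in the function above is how the room ID is built: the two user IDs are concatenated with the larger one first, so both users always end up in the same room no matter who starts the conversation. Here is a minimal sketch of that rule on its own. The helper name buildRoomID and the sample IDs are made up for illustration.

```javascript
// Hypothetical helper: mirrors the roomID expression used in sendWelcomeChat.
// The lexicographically larger ID always comes first, so the result
// does not depend on the order of the arguments.
function buildRoomID(firstUserID, secondUserID) {
  return firstUserID > secondUserID
    ? firstUserID + secondUserID
    : secondUserID + firstUserID;
}

console.log(buildRoomID("abc", "xyz")); // xyzabc
console.log(buildRoomID("xyz", "abc")); // xyzabc
```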

We also created a function that lets you make any user a welcome user, or remove them from the welcome users. This function is available in the Test class:

const Test = class {

constructor() {

}

/*some functions*/

updateWelcomeUser = async (userID, isWelcomeChat) => {

try {

await db.collection("users").doc(userID).update({

welcomeChat: isWelcomeChat

});

console.log(`successfully set welcomeChat to ${isWelcomeChat} for user with ID: ${userID}`);

} catch (error) {

console.log(`error setting welcomeChat to ${isWelcomeChat} for user with ID: ${userID}`);

console.log(error);

}

}

};

const test = new Test();

test.updateWelcomeUser("ZA4564DSDFDF6DF45D6FD65", true);

Then we can set a function that sends multiple messages to the new user:

async function welcomeChatMessages(welcomeUser, newUser) {

const sendMessage = async message => await sendWelcomeChat(welcomeUser, newUser, message);

const githubRepoLink = "https://github.com/aladinyo/ChatPlus"

await sendMessage("Hello I'm the owner of the app, it's nice to meet you and I like seeing you using my app 🎉💥");

await wait(1000);

await sendMessage("I would appreciate seeing you leaving a star on this app's github repository ✨⭐, let's make the whole world see this masterpiece: " + githubRepoLink);

await wait(1000);

await sendMessage("You can text me here, I'll receive a notification and respond to you asap, you should also accept yours, let's chat and talk a bit 🧑💻🚀🔮");

await wait(1000);

await sendMessage("Or maybe you can go for a video call, nothing is better than direct communication 🤩🎥🎦");

}

async function wait(timeout) {

return new Promise((resolve) => {

setTimeout(() => {

resolve();

}, timeout);

});

};

After that, we set up two listeners. The first one keeps an up-to-date array of welcome users. The second one listens for newly added users and executes the welcomeChatMessages function on each new user, once for every welcome user. You can set different welcome messages for different users if you want. Notice that the second listener ignores the first snapshot, because that snapshot contains all the existing users and we only want to run tasks on the new ones:

var welcomeChatUsers = [];

db.collection("users").where("welcomeChat", "in", [true]).onSnapshot(snap => {

welcomeChatUsers = [];

snap.forEach(welcomeUser => {

welcomeChatUsers.push(welcomeUser.data());

});

console.log("welcome chat users: ", welcomeChatUsers);

});

var firstNewUserSnap = true;

db.collection("users").orderBy("name").orderBy("id").onSnapshot(snap => {

if (!firstNewUserSnap) {

snap.docChanges().forEach(userDocChange => {

if (userDocChange.type === "added") {

const newAddedUser = userDocChange.doc.data();

console.log(`user was added `, newAddedUser);

for (let welcomeUser of welcomeChatUsers) {

welcomeChatMessages(welcomeUser, newAddedUser);

};

}

});

} else {

firstNewUserSnap = false;

};

});
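
The "ignore the first snapshot" trick can be wrapped in a reusable handler. The sketch below is an illustration rather than code from ChatPlus: createNewDocsOnlyHandler and fakeSnapshot are hypothetical names, and the fake snapshot only imitates the docChanges() shape used above so the example runs without Firestore.

```javascript
// Hypothetical wrapper: returns a snapshot handler that skips the first snapshot
// (which contains every existing document) and reports only later additions.
function createNewDocsOnlyHandler(onAdded) {
  let isFirstSnapshot = true;
  return snap => {
    if (isFirstSnapshot) {
      isFirstSnapshot = false;
      return;
    }
    snap.docChanges().forEach(change => {
      if (change.type === "added") {
        onAdded(change.doc.data());
      }
    });
  };
}

// Stand-in for a Firestore snapshot, for demonstration only
const fakeSnapshot = users => ({
  docChanges: () => users.map(user => ({ type: "added", doc: { data: () => user } })),
});

const welcomed = [];
const handler = createNewDocsOnlyHandler(user => welcomed.push(user.name));
handler(fakeSnapshot([{ name: "existing user" }])); // first snapshot: ignored
handler(fakeSnapshot([{ name: "new user" }])); // later snapshot: handled
console.log(welcomed); // [ 'new user' ]
```

With the real database, the returned handler could be passed directly to onSnapshot in place of the inline callback.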

What I’ve Learned from Building this App

This app has taught me patience and consistency. It consists of 16,000 lines of code that I wrote and debugged over 3 months. It also taught me to be an engineer of solutions and to always be solution-oriented, because I ran into hundreds of problems and errors while developing it. All I can say is that if you can imagine an app, you can build it!

The ChatPlus App: Full List of Features

- Text messages: Send and receive instant text messages.

- Images: Drag and drop images from the web or your device directly into your chat.

- Audio messages: Record and send audio messages with ease.

- Image preview: Click on an image in the chat, and it will smoothly animate to the center for a better view.

- Typing indicators: See when a user is typing or recording.

- Speech-to-text: Audio recordings in English, French, or Spanish are converted to text using AI.

- Conversation control: Delete conversations as needed.

- User search: Quickly find and connect with other users.

- Online status: See who's online at a glance.

- Unread messages: Easily identify unread messages.

- Read receipts: Know when your messages have been viewed.

- Chat navigation: Use the arrow button to scroll down chats and see unread messages.

- Video and audio calls: Make video or audio calls to other users.

- Call flexibility: Drag call elements around the screen, send messages, and interact with the app during a call.

- Full-screen video: Go full-screen during video calls.

- Mute and camera control: Mute your audio and stop your camera as needed.

- Message history: Fetch up to 30 messages in a chat and load more by scrolling up.

- Audio player: Sent audio messages appear with a grey slider, received messages in green, and played messages in blue. The audio player shows the full time and current playback time.

- Notifications: Receive notifications for new messages.

- Sound alerts: Hear distinct sounds for sent, received, and new messages.

- Web app installation: Click the arrow down button on the home page to install the web app on your device.

- Account management: Delete your account if necessary.

Conclusion

Thank you so much for making it this far in this article. I know it's been tough because the web app is huge, and the code may be confusing at times. Don't hesitate to contact me if you need help understanding the code. If you encounter any problem, post it in the issues section of this app's GitHub repo.

Get started here: https://github.com/aladinyo/ChatPlus and don’t forget to leave a star ✨⭐

Happy coding and Peace ✌️✌️.